Empirical data analyses

What we now call the “empirical data analyses” made up the entirety of the first draft of our manuscript. These analyses apply all of the null frameworks in question to two real-world datasets: meta-analytic activation maps from NeuroSynth and T1w/T2w data from the Human Connectome Project (HCP). Here, we briefly describe the procedures applied to these datasets to generate the results shown in the manuscript.

Note that all empirical analyses can be run with the make empirical command from the root of the repository.

Visualizations of the empirical results can be generated with the make plot_empirical command, and the supplementary analyses can be run with the make suppl_empirical command.

Preprocessing the data

So, to be fair, “preprocessing” here is a bit of a misnomer, since we’re not doing any traditional neuroimaging-style preprocessing (à la fMRIPrep). However, since the only “raw” data we have right now is the HCP data downloaded in the previous step, we have to do a small bit of work.

Note that data preprocessing requires both FreeSurfer and Connectome Workbench, so make sure you’ve installed all the relevant software!

Data preprocessing is run as part of the make empirical command.

NeuroSynth data processing

We rely on data from NeuroSynth to run a number of analyses reported in our paper.

Since we were primarily trying to replicate the analyses run in Alexander-Bloch et al., 2018, NeuroImage, we followed (or attempted to follow) their processing to the letter.

Using the NeuroSynth API, we ran 123 meta-analyses for terms in the NeuroSynth database that overlap with those in the Cognitive Atlas.

It’s important to note that the original authors used only 120 terms in their analyses; however, likely due to updates to the NeuroSynth database (or potentially the Cognitive Atlas) in the two years since the original publication, the new intersection of the databases yielded 123 terms.
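Conceptually, selecting the terms is a set intersection between the two vocabularies. The sketch below assumes both vocabularies are already available as plain lists of strings and that terms match case-insensitively after stripping whitespace; the helper name intersect_terms is hypothetical, and the matching rules actually used in scripts/empirical/fetch_neurosynth_maps.py may differ.

```python
def intersect_terms(neurosynth_terms, cogatlas_terms):
    """Return the sorted terms present in both vocabularies.

    Hypothetical helper: matching is case-insensitive and ignores
    leading/trailing whitespace, which is an assumption.
    """
    def normalize(term):
        return term.strip().lower()

    cogatlas = {normalize(term) for term in cogatlas_terms}
    return sorted({normalize(term) for term in neurosynth_terms} & cogatlas)


# Toy vocabularies, not the real databases
print(intersect_terms(["Attention", "working memory", "pain"],
                      ["attention", "Working Memory", "reasoning"]))
# ['attention', 'working memory']
```

Because the result depends entirely on the contents of both vocabularies, any change to either database between releases can change the number of terms, as with the 120 versus 123 terms noted above.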

To remain consistent with Alexander-Bloch et al., we projected the association maps generated by the meta-analyses to the fsaverage5 surface using FreeSurfer’s mri_vol2surf command.

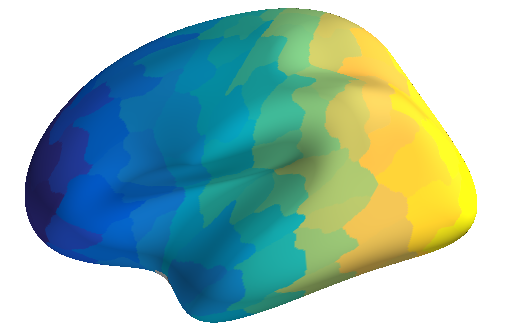
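The projection step above can be scripted by building the mri_vol2surf call in Python. This is a sketch under stated assumptions: the flags used (--mov, --mni152reg, --trgsubject, --hemi, --projfrac-avg, --o) are standard mri_vol2surf options, but the sampling parameters and file names are hypothetical and are not necessarily what Alexander-Bloch et al. or scripts/empirical/fetch_neurosynth_maps.py use.

```python
import shlex
import subprocess


def vol2surf_command(volume, output, hemi, target="fsaverage5"):
    """Build an mri_vol2surf call projecting an MNI152-space volume to a surface."""
    if hemi not in ("lh", "rh"):
        raise ValueError("hemi must be 'lh' or 'rh'")
    return [
        "mri_vol2surf",
        "--mov", volume,           # input volume (assumed to be in MNI152 space)
        "--mni152reg",             # use FreeSurfer's bundled MNI152 registration
        "--trgsubject", target,    # target surface, here fsaverage5
        "--hemi", hemi,
        # hypothetical choice: average samples from 0 to 1 of cortical thickness
        "--projfrac-avg", "0", "1", "0.1",
        "--o", output,
    ]


def project(volume, output, hemi, run=False):
    """Print the command and optionally execute it."""
    cmd = vol2surf_command(volume, output, hemi)
    print(shlex.join(cmd))
    if run:  # requires a working FreeSurfer installation
        subprocess.run(cmd, check=True)
    return cmd


# Hypothetical file names for a single term's association map
project("attention_association.nii.gz", "attention_lh.mgh", "lh")
```

Building the command as a list (rather than a single string) avoids shell-quoting problems with file paths; the same call is simply repeated with hemi="rh" for the right hemisphere.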

Projected data were parcellated with two multi-resolution atlases: the dMRI-derived atlas from Cammoun et al., 2012, J Neurosci Methods and the fMRI-derived atlas from Schaefer et al., 2018, Cereb Cortex. For the Cammoun atlas we used the fsaverage5 annotations generated by our lab and available on OSF; for the Schaefer atlas we used the annotations provided by the original authors.
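Parcellating vertex-wise data amounts to averaging all vertices that share a parcel label. The sketch below assumes the per-vertex labels have already been loaded from an annotation file (for example, with nibabel.freesurfer.read_annot) and, hypothetically, that label 0 marks vertices to discard such as the medial wall; the actual label conventions of the Cammoun and Schaefer annotations may differ.

```python
import numpy as np


def parcellate(data, labels, ignore=(0,)):
    """Average vertex-wise data within each parcel.

    data   : (n_vertices,) values on the surface
    labels : (n_vertices,) integer parcel IDs
    ignore : labels to drop before averaging (hypothetical medial-wall ID)
    Returns the sorted parcel IDs and the mean value of each parcel.
    """
    data = np.asarray(data, dtype=float)
    labels = np.asarray(labels)
    keep = ~np.isin(labels, ignore)
    ids, inverse = np.unique(labels[keep], return_inverse=True)
    sums = np.bincount(inverse, weights=data[keep])  # per-parcel sums
    counts = np.bincount(inverse)                    # vertices per parcel
    return ids, sums / counts


ids, means = parcellate([1, 2, 3, 4, 5, 6], [0, 1, 1, 2, 2, 2])
print(ids, means)  # [1 2] [2.5 5. ]
```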

All code for processing of NeuroSynth data can be found in scripts/empirical/fetch_neurosynth_maps.py.

(Note: in the future, we strongly encourage you to use the fantastic NiMARE package instead of the now-deprecated NeuroSynth API.)

HCP data processing

HCP data are distributed in CIFTI format, so the first processing step was to split the data into two GIFTI files (one per hemisphere) using Connectome Workbench’s wb_command.

Then, to match the NeuroSynth processing pipeline, the data were resampled from the fsLR 32k surface to the fsaverage5 surface.
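The splitting and resampling steps above map onto two wb_command subcommands, -cifti-separate and -metric-resample. The sketch below only builds the command lines; all file names are hypothetical, and it assumes you have an fsLR 32k sphere registered to fsaverage, an fsaverage5 sphere, and the matching vertex-area files for each mesh. The interpolation method used in scripts/empirical/fetch_hcp_myelin.py may differ from the ADAP_BARY_AREA choice here.

```python
import shlex


def cifti_separate_command(cifti, left_out, right_out):
    """wb_command call splitting a dense CIFTI scalar file into two GIFTI metrics."""
    return [
        "wb_command", "-cifti-separate", cifti, "COLUMN",
        "-metric", "CORTEX_LEFT", left_out,
        "-metric", "CORTEX_RIGHT", right_out,
    ]


def metric_resample_command(metric_in, current_sphere, new_sphere, metric_out,
                            current_area, new_area):
    """wb_command call resampling one hemisphere's metric to a new sphere mesh.

    ADAP_BARY_AREA (adaptive barycentric) requires vertex-area information,
    supplied here with -area-metrics.
    """
    return [
        "wb_command", "-metric-resample", metric_in, current_sphere, new_sphere,
        "ADAP_BARY_AREA", metric_out,
        "-area-metrics", current_area, new_area,
    ]


# Hypothetical file names; run once per hemisphere for the resampling step
split = cifti_separate_command("myelin.dscalar.nii",
                               "myelin_L.func.gii", "myelin_R.func.gii")
resample = metric_resample_command(
    "myelin_L.func.gii", "fsLR32k_L.sphere.surf.gii", "fsaverage5_L.sphere.surf.gii",
    "myelin_L_fsaverage5.func.gii", "fsLR32k_L.va.shape.gii", "fsaverage5_L.va.shape.gii",
)
for cmd in (split, resample):
    print(shlex.join(cmd))  # pass to subprocess.run(cmd, check=True) to execute
```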

Resampled data were parcellated with the Cammoun and Schaefer atlases.

All code for processing of HCP data can be found in scripts/empirical/fetch_hcp_myelin.py.

Generating distance matrices

While this arguably does not count as “preprocessing,” it is an important step in our analyses, and one that must be completed before we can generate some of the null maps in the next part of our analysis! We generated both (1) vertex-vertex and (2) parcel-parcel distance matrices. The parcel-parcel matrices are derived from the vertex-vertex matrices: the distance between two parcels is the average distance between every pair of vertices in those two parcels. All distances were generated from the pial mesh of the fsaverage5 surface.
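Both kinds of matrix described above can be sketched with NumPy and SciPy. As a stand-in for exact geodesic distance, this sketch runs Dijkstra’s algorithm (scipy.sparse.csgraph.dijkstra) along the mesh edges, which only approximates true surface geodesics (it tends to overestimate them); scripts/empirical/get_geodesic_distance.py may compute distances differently. The parcel-level function then averages vertex-vertex distances over every pair of vertices in two parcels, leaving the diagonal at zero by a convention assumed here.

```python
import numpy as np
from scipy import sparse
from scipy.sparse.csgraph import dijkstra


def mesh_geodesic(vertices, faces):
    """Approximate geodesic distances as shortest paths along mesh edges."""
    vertices = np.asarray(vertices, dtype=float)
    faces = np.asarray(faces)
    # each triangle contributes edges (0, 1), (1, 2) and (2, 0)
    edges = np.vstack([faces[:, [0, 1]], faces[:, [1, 2]], faces[:, [2, 0]]])
    edges = np.unique(np.sort(edges, axis=1), axis=0)  # drop shared edges
    weights = np.linalg.norm(vertices[edges[:, 0]] - vertices[edges[:, 1]], axis=1)
    n = len(vertices)
    graph = sparse.csr_matrix((weights, (edges[:, 0], edges[:, 1])), shape=(n, n))
    return dijkstra(graph, directed=False)  # (n, n) vertex-vertex distances


def parcel_distance(vertex_dist, labels):
    """Average distance between every pair of vertices in two parcels."""
    labels = np.asarray(labels)
    ids = np.unique(labels)
    out = np.zeros((len(ids), len(ids)))  # diagonal left at zero (assumed convention)
    for i, a in enumerate(ids):
        for j in range(i + 1, len(ids)):
            block = vertex_dist[np.ix_(labels == a, labels == ids[j])]
            out[i, j] = out[j, i] = block.mean()
    return ids, out


# Toy mesh: a unit square split into two triangles
verts = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]
tris = [(0, 1, 2), (0, 2, 3)]
dist = mesh_geodesic(verts, tris)
ids, pdist = parcel_distance(dist, [1, 1, 2, 2])
```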

Code for generating distance matrices can be found in scripts/empirical/get_geodesic_distance.py.

Generating the null maps

When we wrote the first draft of this manuscript, we pre-generated null maps for all of our empirical data analyses. We did this to save computation time where possible; however, subsequent improvements to the implementations of many of the null frameworks made this step less urgent. (That is, generating null maps on the fly might now take several hours rather than several days.) Still, we pre-generate null maps where possible: why not reduce the runtime of our analyses as much as we can?

Refer to the walkthrough page on spatial null models for more information on the (implementations of the) different frameworks tested in the manuscript.

Code for pre-generating null maps for our empirical analyses can be found in scripts/empirical/generate_spin_resamples.py, scripts/empirical/generate_neurosynth_surrogates.py, and scripts/empirical/generate_hcp_surrogates.py.
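As an illustration of pre-generating nulls, the sketch below generates spin-style resamples for a single hemisphere and caches them to disk so later runs can reuse them. It assumes vertex coordinates on a sphere, draws uniformly random rotations via a QR decomposition, and reassigns each rotated vertex to its nearest original vertex with scipy.spatial.cKDTree. It deliberately omits details such as mirroring rotations across hemispheres, and the function names, seed, and file name are hypothetical; it is not the implementation in scripts/empirical/generate_spin_resamples.py.

```python
from pathlib import Path

import numpy as np
from scipy.spatial import cKDTree


def random_rotation(rng):
    """Draw a uniformly distributed 3D rotation matrix via QR decomposition."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))  # fix column signs so q is uniformly distributed
    if np.linalg.det(q) < 0:     # flip one axis to get a proper rotation (det = +1)
        q[:, 0] = -q[:, 0]
    return q


def spin_resamples(sphere_coords, n_perm, seed=1234):
    """Return an (n_vertices, n_perm) array of vertex indices from rotated spheres.

    For a vertex-wise map `data`, data[spins[:, k]] is the k-th rotated null map.
    """
    sphere_coords = np.asarray(sphere_coords, dtype=float)
    rng = np.random.default_rng(seed)
    tree = cKDTree(sphere_coords)
    spins = np.empty((len(sphere_coords), n_perm), dtype=int)
    for k in range(n_perm):
        rotated = sphere_coords @ random_rotation(rng).T
        # each rotated vertex takes the index of the nearest original vertex
        _, idx = tree.query(rotated, k=1)
        spins[:, k] = idx
    return spins


def cached_spins(path, sphere_coords, n_perm, seed=1234):
    """Load resamples from `path` if present; otherwise generate and save them."""
    path = Path(path).with_suffix(".npy")  # np.save always writes a .npy file
    if path.exists():
        return np.load(path)
    spins = spin_resamples(sphere_coords, n_perm, seed=seed)
    np.save(path, spins)
    return spins
```

Storing only integer indices (rather than full null maps) keeps the cache small and lets the same resamples be reused across every map projected onto the same surface.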

Null map generation is run as part of the make empirical command.

Testing the null models

Once the empirical datasets are preprocessed and the null models are (mostly) pre-generated, we test the performance of the nulls on the empirical datasets. Our choice of datasets and analytic procedures was designed to replicate and extend analyses originally reported in Alexander-Bloch et al., 2018, NeuroImage and Burt et al., 2020, NeuroImage.

The empirical analyses are run as part of the make empirical command.

Testing brain map correspondence (NeuroSynth)

All code for running the brain map correspondence analyses can be found in scripts/empirical/run_neurosynth_nulls.py.
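In general terms, a brain map correspondence test compares the empirical correlation between two maps against correlations obtained with null versions of one map. The sketch below assumes parcellated maps, Pearson correlation, and a two-tailed p-value with the common “+1” correction; these are illustrative choices, the function names are hypothetical, and scripts/empirical/run_neurosynth_nulls.py may use a different statistic or p-value definition.

```python
import numpy as np


def zscore_columns(a):
    """Z-score a 1D array, or each column of a 2D array (population std)."""
    a = np.asarray(a, dtype=float)
    return (a - a.mean(axis=0)) / a.std(axis=0)


def correspondence_pvalue(x, y, y_nulls):
    """Correlate two parcellated maps and test r against null versions of y.

    x, y    : (n_parcels,) empirical maps
    y_nulls : (n_parcels, n_perm) null maps generated from y
    Returns the empirical Pearson r and a two-tailed permutation p-value.
    """
    xz = zscore_columns(x)
    r_emp = np.mean(xz * zscore_columns(y))
    # Pearson r of x with every null map at once (mean of z-score products)
    r_null = np.mean(xz[:, None] * zscore_columns(y_nulls), axis=0)
    exceed = np.sum(np.abs(r_null) >= np.abs(r_emp))
    return r_emp, (1 + exceed) / (1 + r_null.size)
```

The “+1” in numerator and denominator counts the empirical map as one member of the null distribution, so the p-value can never be exactly zero with a finite number of nulls.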

Testing partition specificity (HCP)

All code for running the partition specificity analyses can be found in scripts/empirical/run_hcp_nulls.py.
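A partition specificity test asks whether the average value of a map within each network of a partition is more extreme than expected under the null maps. The sketch below assumes parcellated T1w/T2w data, one network label per parcel, and a two-tailed p-value measured relative to the mean of each network’s null distribution; these choices and the function name are hypothetical and may not match scripts/empirical/run_hcp_nulls.py.

```python
import numpy as np


def network_specificity(data, networks, null_maps):
    """Compare the mean of `data` within each network to a null distribution.

    data      : (n_parcels,) empirical map
    networks  : (n_parcels,) network label for each parcel
    null_maps : (n_parcels, n_perm) null versions of `data`
    Returns {label: (empirical mean, z-score, two-tailed p-value)}.
    """
    data = np.asarray(data, dtype=float)
    networks = np.asarray(networks)
    null_maps = np.asarray(null_maps, dtype=float)
    results = {}
    for label in np.unique(networks):
        mask = networks == label
        emp = data[mask].mean()
        null = null_maps[mask].mean(axis=0)  # network mean under each null map
        center = null.mean()
        z = (emp - center) / null.std(ddof=1)
        exceed = np.sum(np.abs(null - center) >= np.abs(emp - center))
        results[label] = (emp, z, (1 + exceed) / (1 + null.size))
    return results
```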

Visualizing empirical results

Once the empirical analyses have been run, the results can be plotted using the make plot_empirical command.

(Note that this assumes you have already run make empirical!)

This saves many individual plots, which we combined to create Figures 1, 4-6, and S6-7 in the manuscript.

Supplementary analyses

We also tested how minor variations in the implementation of different null models affect the results. Namely, we examined how the method used to define parcel centroids affects the results of the spatial permutation null frameworks, and whether constraining geodesic travel when creating distance matrices affects the results of the parameterized data models.
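Parcel-level spatial permutation methods typically rotate one coordinate per parcel, so how that coordinate is defined can matter. The sketch below shows two plausible definitions: the mean of a parcel’s vertex coordinates (which may lie off the surface) and the parcel vertex closest to that mean (which always lies on the surface). The function name and method names are hypothetical, and the exact set of centroid definitions compared in the manuscript may differ.

```python
import numpy as np


def parcel_centroids(coords, labels, method="average"):
    """Compute one centroid per parcel.

    'average': mean of the parcel's vertex coordinates (may lie off the surface)
    'surface': the parcel vertex closest to that mean (always on the surface)
    """
    coords = np.asarray(coords, dtype=float)
    labels = np.asarray(labels)
    ids = np.unique(labels)
    centroids = np.empty((len(ids), coords.shape[1]))
    for i, label in enumerate(ids):
        pts = coords[labels == label]
        mean = pts.mean(axis=0)
        if method == "average":
            centroids[i] = mean
        elif method == "surface":
            nearest = np.argmin(np.linalg.norm(pts - mean, axis=1))
            centroids[i] = pts[nearest]
        else:
            raise ValueError(f"unknown method: {method!r}")
    return ids, centroids


coords = [(0, 0, 0), (2, 0, 0), (3, 0, 0)]
print(parcel_centroids(coords, [1, 1, 1], "average")[1])  # mean at x = 5/3
print(parcel_centroids(coords, [1, 1, 1], "surface")[1])  # vertex at x = 2
```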

You can generate these supplementary results with the command make suppl_empirical.

(Note that this assumes you have already run make empirical!)